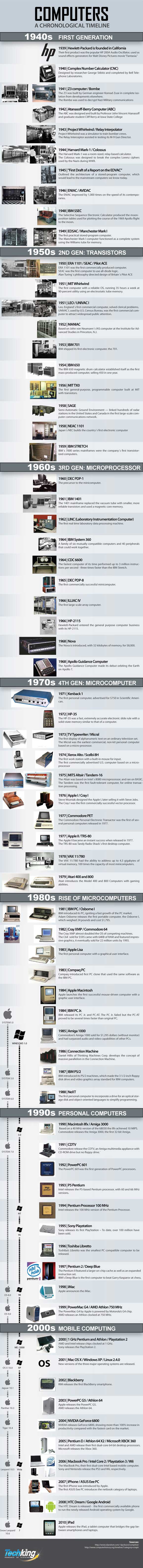

Let’s sit back and think about what life was like before computers were everywhere. You actually had to send letters via the postal service, go to stores to buy things, and visit Uncle Larry at his house instead of at the prison where he is serving time for a hard drive full of illegal images. Anyway, even though a mostly computerless world wasn’t that long ago, it is hard to explain to some of the younger generation what a pain it really was. So in honor of the great technology we use every day, I present to you ‘Computers – A Chronological Timeline’. Enjoy!

The history of computers begins thousands of years before modern electronic machines emerged, tracing back to ancient civilizations that created mechanical devices for calculation. The abacus, invented around 2400 BCE in Babylon, represents one of humanity’s earliest computing tools, enabling merchants and scholars to perform arithmetic operations through bead manipulation on rods. Ancient Greeks developed the Antikythera mechanism around 100 BCE, an astronomical calculator that predicted celestial positions with remarkable accuracy. These primitive devices demonstrated humanity’s enduring desire to automate calculation processes and extend mental capabilities through mechanical means.

Early civilizations recognized that complex calculations required tools beyond human memory and manual counting methods. These foundational instruments established principles that would guide computer development for millennia, particularly the concept of representing numbers through physical positions and automating repetitive calculations. The abacus evolved across different cultures, with Chinese, Japanese, and Roman variations each contributing unique innovations to calculation methodology. Just as ancient innovators sought efficiency through mechanical aids, modern professionals pursue project management excellence to optimize contemporary workflows.
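The idea of representing numbers through physical positions can be sketched in a few lines of Python. This is an illustrative model only: the names `to_rods`, `from_rods`, and `add_on_rods`, and the simple one-decimal-digit-per-rod layout, are assumptions made for the example rather than a description of any particular historical abacus.

```python
def to_rods(n: int, rods: int = 6) -> list[int]:
    """Return the bead count on each rod, most significant rod first."""
    if n < 0 or n >= 10 ** rods:
        raise ValueError("number does not fit on this many rods")
    digits = []
    for _ in range(rods):
        n, digit = divmod(n, 10)  # peel off the least significant digit
        digits.append(digit)
    return digits[::-1]


def from_rods(beads: list[int]) -> int:
    """Read the number back from the bead counts."""
    total = 0
    for count in beads:
        total = total * 10 + count
    return total


def add_on_rods(a: list[int], b: list[int]) -> list[int]:
    """Add two layouts column by column, carrying into the next rod."""
    result = []
    carry = 0
    for x, y in zip(reversed(a), reversed(b)):
        carry, digit = divmod(x + y + carry, 10)
        result.append(digit)
    if carry:
        raise OverflowError("sum does not fit on the available rods")
    return result[::-1]


layout = to_rods(1642)
total = from_rods(add_on_rods(layout, to_rods(1673)))
print(layout, total)
```

The carry step in `add_on_rods` is the same repetitive rule a human operator applies by hand, which is the kind of procedure later mechanical calculators automated with gears.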

Mechanical Computing Engines During the Enlightenment Era

The seventeenth and eighteenth centuries witnessed revolutionary advances in mechanical computing as scientists and inventors created machines capable of performing complex arithmetic automatically. Blaise Pascal invented the Pascaline in 1642, a mechanical calculator using gears and wheels to perform addition and subtraction without human intervention beyond initial input. Gottfried Wilhelm Leibniz improved upon Pascal’s design in 1673 with the stepped reckoner, incorporating multiplication and division capabilities through an ingenious cylinder mechanism. These machines represented quantum leaps in computation, transforming calculation from purely mental exercise to mechanically assisted process. The Enlightenment period’s emphasis on reason and scientific method catalyzed inventive approaches to automating intellectual work.

Charles Babbage revolutionized computing conceptually during the nineteenth century by designing the Difference Engine and later the Analytical Engine, machines that embodied principles underlying modern computers. Though never completed during his lifetime, Babbage’s Analytical Engine incorporated revolutionary concepts including programmable instructions, memory storage, and conditional branching. Ada Lovelace, working with Babbage, recognized the machine’s potential beyond pure calculation, essentially creating the first computer program. Modern cloud computing infrastructures, which define service boundaries and benefits through sophisticated agreements, continue the tradition of formalizing computational capabilities that Babbage and Lovelace pioneered. Their visionary work established conceptual frameworks that would guide computer development for the next century.

Electromechanical Systems and Early Automation Machines

The late nineteenth and early twentieth centuries brought electromechanical computing systems that combined electrical components with mechanical devices, creating more powerful and versatile calculation tools. Herman Hollerith developed punch card tabulating machines for the 1890 United States Census, dramatically reducing data processing time from years to months. His company eventually became International Business Machines, better known as IBM, which would dominate computing for decades. Punch cards became the standard method for data input and storage, remaining prevalent until the 1970s. These electromechanical systems demonstrated that electricity could enhance mechanical computation, enabling faster processing and greater complexity.

Konrad Zuse created the Z3 in 1941, widely considered the first programmable, fully automatic digital computer using electromechanical relays. Meanwhile, the Harvard Mark I, completed in 1944, represented another milestone in electromechanical computing with its ability to execute long computations automatically. These machines bridged mechanical and electronic computing eras, proving that automated calculation could handle previously impossible computational challenges. Organizations today leverage cloud computing applications that descend directly from these pioneering systems. The electromechanical period established computing as essential infrastructure for government, business, and scientific research, setting the stage for electronic revolution.

Electronic Computing Revolution and Vacuum Tube Technology

The 1940s witnessed the birth of electronic computing through machines replacing mechanical relays with vacuum tubes, dramatically increasing processing speeds. The Colossus computers, developed in Britain during World War II to break German codes, represented some of the first programmable electronic computers. ENIAC, completed in 1945 at the University of Pennsylvania and publicly unveiled in 1946, is generally regarded as the first general-purpose electronic computer; it contained over seventeen thousand vacuum tubes and weighed roughly thirty tons. These machines performed calculations thousands of times faster than electromechanical predecessors, revolutionizing scientific computation and military applications. Electronic components eliminated mechanical limitations, enabling computers to tackle previously impractical problems in cryptography, ballistics, and weather prediction.

UNIVAC I, delivered in 1951, became the first commercial computer produced in the United States and sold to business and government customers, marking computing’s transition from research laboratories to practical applications. These first-generation computers consumed enormous power, generated tremendous heat, and required constant maintenance as vacuum tubes frequently failed. Despite limitations, they demonstrated electronic computing’s transformative potential across industries. Understanding foundational computing architectures parallels comprehending cloud infrastructure design in contemporary contexts. The vacuum tube era established electronic computing as a viable technology worthy of substantial investment and demonstrated the computing industry’s commercial potential.

Transistor Innovation and Second Generation Computing Systems

The invention of the transistor in 1947 by Bell Laboratories scientists John Bardeen, Walter Brattain, and William Shockley revolutionized electronics and enabled second-generation computers. Transistors replaced vacuum tubes, offering smaller size, lower power consumption, greater reliability, and reduced heat generation. By the late 1950s, transistorized computers began appearing, including the IBM 1401 and DEC PDP-1, machines that made computing accessible to more organizations. Transistors enabled computers to become smaller, faster, and more affordable, expanding computing beyond military and government applications into universities and larger corporations. This miniaturization trend would accelerate throughout computing history, continuously making machines more powerful and accessible.

Second-generation computers introduced high-level programming languages including FORTRAN, COBOL, and LISP, making programming more accessible to scientists and business professionals rather than exclusively hardware engineers. These languages abstracted away machine-level complexity, enabling programmers to focus on problem-solving rather than hardware intricacies. Magnetic core memory replaced slower drum memory, improving data access speeds significantly. Modern identity management solutions, particularly those involving SailPoint IAM implementations, build upon principles of access control established during this era. The transistor era democratized computing, transforming it from an exotic technology accessible only to specialists into a tool that diverse professionals could use.

Integrated Circuits and the Microprocessor Revolution

The development of integrated circuits in the late 1950s and early 1960s initiated third-generation computing, placing multiple transistors on a single semiconductor chip. Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor independently developed integrated circuit technology, enabling exponential increases in computing power while reducing size and cost. IBM System/360, introduced in 1964, became the first major computer family of this generation; it was built on IBM’s hybrid Solid Logic Technology modules rather than fully monolithic integrated circuits, and it offered compatibility across different models, establishing standardization concepts. Integrated circuits enabled computers to become significantly smaller, more reliable, and more powerful than their discrete-transistor predecessors. This miniaturization enabled computing to penetrate new markets including smaller businesses and research institutions.

The microprocessor, introduced by Intel in 1971 and credited to a team that included Ted Hoff, Federico Faggin, Stanley Mazor, and Masatoshi Shima, revolutionized computing by placing an entire central processing unit on a single chip. The Intel 4004, the first commercial microprocessor, contained 2,300 transistors and paved the way for personal computers. Microprocessors democratized computing, making machines affordable for individuals and small organizations rather than exclusively large institutions. This innovation sparked the personal computer revolution that would transform society fundamentally. Security professionals pursuing CCSP certification mastery must understand computing evolution to appreciate cloud security challenges. Microprocessors enabled computing to transition from an institutional resource to a personal tool, fundamentally altering how humans interact with information technology.

Personal Computing Emergence and Consumer Market Expansion

The 1970s and early 1980s witnessed personal computing’s emergence as hobbyists and entrepreneurs created machines for individual ownership rather than institutional sharing. The Altair 8800, released in 1975, became the first commercially successful personal computer, sold primarily as a kit requiring assembly. Apple Computer, founded by Steve Jobs and Steve Wozniak, introduced the Apple II in 1977, offering a fully assembled computer with color graphics appealing to broader audiences beyond electronics enthusiasts. Commodore PET and Tandy TRS-80 also debuted in 1977, establishing personal computing as a viable consumer market. These machines brought computing into homes, schools, and small businesses, democratizing access to computational power.

IBM’s entry into personal computing with the IBM PC in 1981 legitimized the market and established architectural standards that dominated for decades. The IBM PC’s open architecture encouraged third-party hardware and software development, creating an ecosystem that accelerated innovation. Microsoft’s MS-DOS operating system, licensed to IBM, positioned Microsoft as the dominant software provider as the PC market exploded. Understanding identity verification methods like multi-factor authentication becomes essential as personal computing expands security challenges. Personal computing transformed computers from institutional tools into ubiquitous consumer devices, fundamentally altering work, education, and entertainment.

Graphical User Interfaces and Computing Accessibility

The development of graphical user interfaces during the 1980s transformed computing from text-based command-line systems into visually intuitive environments accessible to non-technical users. Xerox PARC pioneered GUI concepts with the Alto computer in the 1970s, introducing windows, icons, menus, and pointing devices. Apple commercialized GUI concepts with the Macintosh in 1984, featuring a mouse-driven interface that eliminated the need for command memorization. Microsoft followed with Windows, eventually dominating personal computing through successive versions. GUIs democratized computing by making machines accessible to people without programming knowledge, dramatically expanding potential user base beyond technical specialists.

GUI development paralleled the evolution of mouse technology, shifting human-computer interaction from keyboard-centric to pointing-device-driven interfaces. This paradigm shift made computers intuitive for diverse applications including desktop publishing, graphic design, and multimedia production. The GUI revolution enabled computing to penetrate virtually every industry and aspect of modern life. Just as early systems required password authentication for security, modern interfaces balance usability with protection. Graphical interfaces were a crucial innovation that made computing a universal tool rather than a specialized one, establishing the foundation for contemporary computing experiences.

Networking Foundations and Internet Protocol Development

Computer networking emerged during the 1960s and 1970s as researchers sought to connect machines for resource sharing and communication. ARPANET, developed by the United States Department of Defense Advanced Research Projects Agency, became operational in 1969, connecting computers at research institutions. TCP/IP protocols, developed by Vinton Cerf and Robert Kahn, established standardized communication methods enabling diverse networks to interconnect. These protocols formed the foundation for the Internet, which evolved from military and academic networks into global communication infrastructure. Networking transformed computers from standalone calculation devices into communication tools, fundamentally expanding their utility and importance.

The Internet’s commercialization during the 1990s revolutionized global communication, commerce, and information access. Tim Berners-Lee invented the World Wide Web in 1989, creating a hypertext system making the Internet accessible to non-technical users. Web browsers including Mosaic and Netscape Navigator popularized Internet access, sparking a dot-com boom as businesses recognized online opportunities. Network infrastructure components like network protection architecture became crucial as connectivity expanded. Networking transformed isolated computing devices into interconnected global systems, enabling collaboration, communication, and information sharing at unprecedented scales.

Mobile Computing and Wireless Communication Integration

Mobile computing emerged during the 1990s and 2000s as manufacturers created portable devices with computing capabilities comparable to desktop machines. Laptops evolved from bulky, expensive machines into lightweight, powerful computers suitable for mobile professionals. Personal digital assistants including Palm Pilot and BlackBerry devices introduced handheld computing for scheduling, communication, and information management. These devices demonstrated demand for computing untethered from desks, enabling productivity anywhere. Wireless networking technologies including Wi-Fi and cellular data enabled mobile devices to maintain Internet connectivity, eliminating dependency on wired connections. Mobile computing represented a paradigm shift from stationary to ubiquitous computing.

Smartphones, particularly the iPhone introduced in 2007, revolutionized mobile computing by combining phone, computer, and Internet access into a single pocket-sized device. Touchscreen interfaces eliminated physical keyboards, making devices more intuitive and versatile. App stores created ecosystems where developers could distribute software directly to consumers, spawning the mobile application economy. Understanding concepts like load balancing systems becomes important as mobile computing scales globally. The mobile revolution made computing truly ubiquitous, with billions of people accessing computational power and Internet connectivity through devices they carry constantly.

Cloud Computing Paradigm and Distributed Infrastructure

Cloud computing emerged during the 2000s as organizations recognized the advantages of accessing computing resources remotely rather than maintaining local infrastructure. Amazon Web Services, launched in 2006, pioneered commercial cloud computing by offering scalable computing, storage, and networking resources on demand. Cloud computing enabled organizations to reduce capital expenditure on hardware while gaining flexibility to scale resources based on demand. This paradigm shifted computing from an ownership model to a service model, fundamentally altering IT economics. Cloud providers built massive data centers globally, offering computing power vastly exceeding what individual organizations could economically maintain.

Cloud computing encompasses infrastructure-as-a-service, platform-as-a-service, and software-as-a-service models, each offering different abstraction levels and management responsibilities. Organizations migrated applications, data, and entire infrastructures to cloud environments, benefiting from reliability, scalability, and reduced operational complexity. Proper cable management infrastructure remains essential even in cloud-centric environments. Cloud computing represents another democratization wave, enabling startups and small organizations to access enterprise-grade infrastructure previously available only to large corporations. This model continues evolving as hybrid and multi-cloud strategies gain prominence.

Artificial Intelligence Integration and Machine Learning Advancement

Artificial intelligence research, dating to computing’s earliest days, achieved practical applications during the 2010s as processing power and data availability reached critical thresholds. Machine learning algorithms, particularly deep neural networks, demonstrated capabilities in image recognition, natural language processing, and game playing that matched or exceeded human performance. Companies integrated AI into products and services, from recommendation systems to autonomous vehicles. GPU computing, originally developed for graphics rendering, proved ideal for parallel processing required by neural networks, accelerating AI adoption. Artificial intelligence transformed from academic pursuit into practical technology reshaping industries from healthcare to transportation.

AI assistants including Siri, Alexa, and Google Assistant brought artificial intelligence into everyday life, enabling voice-controlled computing for billions of users. Machine learning improved continuously as systems processed more data, creating a virtuous cycle of increasing capability. Organizations leveraged AI for automation, insight generation, and decision support across functions. Security professionals must adopt strategic cybersecurity mindsets to address AI-related challenges. Artificial intelligence represents computing’s frontier, with potential to automate intellectual work just as the industrial revolution mechanized physical labor, fundamentally transforming economy and society.

Quantum Computing Emergence and Future Computational Possibilities

Quantum computing represents computing’s next frontier, leveraging quantum mechanical phenomena to perform certain calculations that are impractical for classical computers. Unlike traditional bits, which are always either zero or one, quantum bits can exist in a superposition of both states; quantum algorithms exploit superposition and interference so that a measurement is likely to yield a correct answer. Companies including IBM, Google, and a number of startups have invested heavily in quantum computing development, achieving milestones including quantum supremacy demonstrations. While practical applications remain limited, quantum computing promises significant advances in cryptography, drug discovery, optimization, and simulation. For some problems, quantum computers could find solutions in minutes that would take classical computers millennia.
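To make superposition a little more concrete, here is a minimal sketch that simulates a single qubit on an ordinary computer. It assumes a qubit can be modeled as a pair of amplitudes for the states zero and one; the helper names `hadamard` and `probabilities` are chosen for this example and do not come from any quantum computing library.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate, which turns a definite state into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Return the chance of measuring zero and one (squared amplitude magnitudes)."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


zero = (1.0, 0.0)              # a qubit that is definitely zero
plus = hadamard(zero)          # equal superposition of zero and one
p0, p1 = probabilities(plus)   # each outcome is about equally likely

back = hadamard(plus)          # interference cancels the "one" amplitude
q0, q1 = probabilities(back)   # measuring now gives zero almost surely

print(p0, p1, q0, q1)
```

Applying the gate a second time returns the qubit to a definite state, which illustrates interference: amplitudes can cancel as well as add, and useful quantum algorithms arrange for wrong answers to cancel. Simulating many qubits this way needs a number of amplitudes that doubles with each added qubit, which is one reason classical simulation becomes impractical.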

Quantum computing faces substantial challenges including maintaining quantum coherence and error correction, requiring extreme cooling and isolation from environmental interference. Despite obstacles, steady progress suggests quantum computing will eventually complement classical computing for specific problem domains. Organizations must prepare for quantum computing’s security implications, as quantum algorithms could break current encryption methods. Understanding identity and access management becomes crucial in the quantum computing era. Quantum computing represents profound advancement in computational capability, potentially enabling scientific breakthroughs currently beyond reach.

Cybersecurity Evolution and Digital Protection Mechanisms

As computing became ubiquitous and interconnected, cybersecurity emerged as a critical discipline protecting systems, networks, and data from malicious actors. Early computers faced minimal security threats due to limited connectivity, but Internet proliferation created attack surfaces exploited by criminals, nation-states, and hacktivists. Cybersecurity evolved from simple password protection to sophisticated multi-layered defenses including firewalls, intrusion detection, encryption, and behavioral analysis. Organizations established security operations centers that monitor systems continuously for threats. Security became integral to computing rather than an afterthought, influencing system design, development practices, and operational procedures.

The cybersecurity landscape continuously evolves as attackers develop new techniques and defenders respond with countermeasures. Ransomware, distributed denial of service attacks, and advanced persistent threats represent modern challenges requiring constant vigilance. Security professionals increasingly leverage bug bounty programs to identify vulnerabilities before malicious exploitation. Artificial intelligence enhances both offensive and defensive capabilities, creating a technological arms race. Cybersecurity importance will only increase as society’s dependence on computing deepens, making security expertise among the most valuable skills in the technology sector.

Open Source Movement and Collaborative Software Development

The open source movement transformed software development by creating collaborative ecosystems where programmers worldwide contribute to shared codebases. Richard Stallman founded the Free Software Foundation in 1985, establishing principles of software freedom and user rights. Linus Torvalds released the Linux kernel in 1991, demonstrating that distributed collaboration could create an operating system rivaling commercial alternatives. Open source development challenged proprietary software models, proving that transparency and collaboration could produce high-quality, reliable software. Companies increasingly adopted open source software, reducing costs while benefiting from community-driven innovation and bug fixes.

Open source principles extended beyond software into hardware designs, educational resources, and data sets, creating commons of freely accessible knowledge and tools. GitHub and similar platforms facilitated collaboration, enabling millions of developers to contribute to projects globally. Open source software now powers much of Internet infrastructure, from web servers to databases to programming languages. Professionals securing systems must understand application security fundamentals across diverse platforms. The open source movement democratized software development, proving that collaborative models could challenge and often surpass traditional commercial approaches.

Information Security Standards and Compliance Frameworks

As organizations recognized cybersecurity’s importance, international standards emerged providing frameworks for implementing comprehensive security programs. ISO 27001, published in 2005, established requirements for information security management systems and became widely adopted globally. Organizations achieving ISO 27001 certification demonstrated a systematic approach to managing sensitive information. Various industry-specific standards and regulations addressed unique sectoral needs, including PCI DSS for payment cards, HIPAA for healthcare, and SOC 2 for service providers. Standards provided blueprints for security programs, helping organizations implement controls systematically rather than haphazardly.

Compliance frameworks evolved alongside standards, establishing legal and regulatory requirements for data protection and privacy. GDPR in Europe and CCPA in California represented significant privacy regulations affecting organizations globally. Compliance became business imperative as violations resulted in substantial fines and reputational damage. Understanding ISO 27001 foundations helps organizations implement systematic security. Standards and compliance frameworks professionalized cybersecurity, transforming it from technical specialty into business-critical function requiring governance, measurement, and continuous improvement.

Data Analytics and Business Intelligence Transformation

Computing’s evolution enabled organizations to collect, store, and analyze unprecedented data volumes, creating data analytics and business intelligence disciplines. Early database systems provided structured data storage, but limited analytical capabilities. Data warehousing concepts emerged during the 1990s, consolidating organizational data for analysis. Business intelligence tools enabled non-technical users to query data, generate reports, and identify trends. Analytics transformed from IT specialty into business function, with executives making data-driven decisions rather than relying solely on intuition and experience.

Big data technologies including Hadoop and Spark enabled processing of massive datasets exceeding traditional database capabilities. Cloud platforms democratized analytics, providing scalable infrastructure without massive capital investment. Machine learning enhanced analytics, identifying patterns humans might miss and generating predictive insights. Organizations leveraging GCP analytics capabilities gain competitive advantages through data-driven decision making. The analytics revolution transformed data from an operational byproduct into a strategic asset, with organizations competing on analytical sophistication and insight generation.

Cloud Migration Strategies and Infrastructure Modernization

Organizations increasingly migrated applications and infrastructure from on-premises data centers to cloud environments, pursuing benefits including scalability, reliability, and cost optimization. Cloud migration required careful planning, addressing application compatibility, data transfer, security, and organizational change management. Various migration strategies emerged, from simple lift-and-shift approaches moving applications unchanged to cloud-native redesigns optimizing for cloud environments. Organizations often pursued hybrid approaches, maintaining some systems on-premises while migrating others to cloud. Migration complexity varied dramatically based on application architecture, data sensitivity, and regulatory requirements.

Successful migrations required understanding cloud service models, selecting appropriate providers, and redesigning operations for cloud environments. Organizations developed cloud expertise through training, hiring, and partnering with consulting firms. Migration programs often spanned years, particularly for large enterprises with complex IT estates. Professionals understanding GCP migration fundamentals facilitate successful transitions. Cloud migration represented strategic transformation rather than simple technology change, requiring organizational commitment and cultural adaptation to new operational models.

Platform as a Service and Application Development Evolution

Platform-as-a-service offerings emerged as cloud providers recognized developers needed more than raw infrastructure, offering pre-configured environments for application development and deployment. PaaS abstracted infrastructure complexity, enabling developers to focus on application logic rather than server management, networking, and scaling. Google App Engine, Microsoft Azure App Services, and similar platforms provided managed environments supporting various programming languages and frameworks. PaaS accelerated development cycles, reduced operational overhead, and enabled smaller teams to build and maintain sophisticated applications. This model particularly appealed to startups and enterprises seeking development efficiency.

PaaS evolution included serverless computing, further abstracting infrastructure by executing code in response to events without requiring server provisioning. Serverless enabled extreme scalability and cost efficiency, charging only for actual compute time rather than reserved capacity. Developers embraced PaaS and serverless for appropriate workloads, though some applications remained better suited for infrastructure-as-a-service or on-premises deployment. Understanding Google Cloud Platform foundations helps developers leverage modern cloud capabilities. PaaS represented another abstraction layer in computing’s evolution, continuously making development more accessible and efficient.

Site Reliability Engineering and Operations Excellence

Site reliability engineering emerged as a discipline combining software engineering and systems administration to build and maintain reliable, scalable systems. Google popularized SRE concepts, emphasizing automation, monitoring, and engineering approaches to operations. SRE teams measured reliability through service-level objectives and error budgets, balancing reliability against feature velocity. This approach transformed operations from reactive firefighting into proactive engineering, preventing problems rather than merely responding to incidents. SRE principles spread across industry as organizations recognized traditional operations approaches couldn’t scale to modern system complexity.
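The arithmetic behind an error budget is simple enough to sketch. The example below assumes an availability-style service-level objective measured over a rolling window; the function names and the 30-day default window are illustrative choices for this sketch, not definitions taken from any specific SRE tool.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Return how many minutes of unavailability the SLO allows in the window."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction strictly between 0 and 1")
    total_minutes = window_days * 24 * 60
    return (1.0 - slo) * total_minutes


def budget_remaining(slo: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Return the unspent fraction of the budget (negative once it is exhausted)."""
    budget = error_budget_minutes(slo, window_days)
    return (budget - downtime_minutes) / budget


# A 99.9% objective over 30 days allows roughly 43.2 minutes of downtime.
budget = error_budget_minutes(0.999)

# After 10.8 minutes of outages, about three quarters of the budget is left.
remaining = budget_remaining(0.999, downtime_minutes=10.8)

print(round(budget, 1), round(remaining, 2))
```

A team might treat a healthy remaining fraction as room to ship features faster and a depleted one as a signal to prioritize reliability work, which is the balance between reliability and feature velocity described above.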

SRE emphasizes observability, ensuring systems provide data necessary for understanding behavior and diagnosing issues. Automation eliminates repetitive tasks, reducing human error while enabling operations teams to focus on strategic improvements. Incident response processes formalize handling of outages and degradations, ensuring systematic problem resolution and learning from failures. Organizations implementing SRE operational excellence achieve higher reliability while maintaining innovation velocity. SRE represents maturation of operations practices, applying engineering rigor to reliability challenges presented by distributed systems and continuous deployment.

Avaya Communication Platforms and Enterprise Telephony Systems

Modern enterprises rely on sophisticated communication platforms integrating voice, video, and messaging into unified systems supporting global organizations. Avaya emerged as major communication infrastructure provider, offering solutions ranging from small business phone systems to massive enterprise deployments. These platforms evolved from traditional private branch exchange systems into software-defined communication infrastructures leveraging Internet Protocol technologies. Organizations benefit from features including automated call distribution, interactive voice response, and integration with customer relationship management systems. Communication platforms became strategic infrastructure enabling customer service, internal collaboration, and business operations.

Session Initiation Protocol (SIP) became the standard for initiating and managing communication sessions, enabling interoperability between different vendors’ systems. Communication platforms increasingly moved to cloud deployments, reducing on-premises hardware requirements while providing flexibility and scalability. Organizations pursuing unified communications integrated telephony with email, instant messaging, and video conferencing into single platforms accessible across devices. Professional expertise in Avaya core implementations demonstrates specialized communication infrastructure knowledge. Enterprise communication evolution mirrors broader computing trends toward integration, cloud delivery, and user experience optimization.

Collaboration Server Infrastructure and Unified Communications

Collaboration servers provide the backbone for modern organizational communication, hosting services including presence information, instant messaging, and video conferencing. These systems enable real-time communication regardless of participant location, supporting distributed workforce models. Collaboration platforms integrate with productivity applications, enabling seamless transitions between communication and work artifacts. Organizations standardized on collaboration platforms to reduce communication fragmentation and improve productivity. Features including screen sharing, whiteboarding, and recording transformed collaboration beyond simple conversation into rich multimedia experiences. Collaboration infrastructure became essential as remote work proliferated, replacing in-person interactions with digital equivalents.

Security considerations for collaboration platforms include encryption for communication privacy, access controls limiting platform usage to authorized users, and data retention policies complying with regulations. Organizations balance collaboration benefits against security and compliance requirements. High-availability designs ensure collaboration services remain accessible even during infrastructure failures. Skills in Avaya collaboration systems demonstrate expertise in enterprise communication platforms. Collaboration infrastructure investment reflects recognition that effective communication directly impacts organizational productivity, employee satisfaction, and business outcomes.

Middleware Solutions and Application Integration Architecture

Middleware platforms provide connectivity and integration between diverse applications, enabling data flow and process coordination across organizational systems. These solutions abstract integration complexity, providing pre-built connectors, transformation capabilities, and workflow orchestration. Organizations with heterogeneous application portfolios rely on middleware to create cohesive technology ecosystems from disparate systems. Integration platforms evolved from simple point-to-point connections to sophisticated service-oriented architectures and microservices implementations. Middleware enables organizations to leverage existing system investments while adding new capabilities, avoiding costly system replacements. Integration architecture became critical as organizations pursued digital transformation initiatives requiring coordination across multiple systems.

Modern middleware includes API management platforms enabling organizations to expose and consume services securely. Integration platform as a service offerings deliver middleware capabilities through cloud models, reducing infrastructure management burden. Real-time integration capabilities enable event-driven architectures responding immediately to business events. Understanding Avaya middleware technologies demonstrates integration expertise. Middleware represents a crucial layer in enterprise architecture, enabling agility and integration that monolithic systems cannot provide. Organizations increasingly view integration capabilities as competitive advantage, enabling faster adaptation to market changes.

Voice Over IP Infrastructure and Network Telephony

Voice over IP technologies transformed telephony by transmitting voice communications over data networks rather than dedicated circuit-switched telephone networks. VoIP reduced communication costs, particularly for long-distance and international calls, while enabling advanced features impossible with traditional telephony. Organizations migrated from costly private branch exchange systems to IP-based communication platforms offering greater flexibility. VoIP quality depends on network performance, requiring quality of service mechanisms prioritizing voice traffic over less time-sensitive data. Codec selection balances voice quality against bandwidth consumption, with various standards addressing different requirements. VoIP proliferation required network infrastructure upgrades ensuring sufficient bandwidth and appropriate latency characteristics.
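
The codec trade-off can be illustrated with a rough per-call bandwidth estimate. The sketch below assumes IPv4 with plain UDP and RTP headers (20 + 8 + 12 bytes), ignores Layer 2 framing and header compression, and uses the commonly quoted codec rates for G.711 (64 kbps) and G.729 (8 kbps) with 20 ms packetization. The function name is illustrative.

```python
# IPv4 (20) + UDP (8) + RTP (12) header bytes per packet.
# Assumption: no RTP extensions, no header compression, no Layer 2 overhead.
IP_UDP_RTP_HEADER_BYTES = 20 + 8 + 12


def call_bandwidth_bps(codec_bitrate_bps: int, packet_interval_ms: int) -> float:
    """Estimate one-way IP-layer bandwidth for a single voice stream."""
    # Voice payload carried in each packet.
    payload_bytes = codec_bitrate_bps / 8 * packet_interval_ms / 1000
    # Number of packets sent each second.
    packets_per_second = 1000 / packet_interval_ms
    return (payload_bytes + IP_UDP_RTP_HEADER_BYTES) * 8 * packets_per_second


g711 = call_bandwidth_bps(64_000, 20)  # 160-byte payload, 50 packets per second
g729 = call_bandwidth_bps(8_000, 20)   # 20-byte payload, 50 packets per second
print(f"G.711: {g711 / 1000:.0f} kbps, G.729: {g729 / 1000:.0f} kbps")
```

Under these assumptions G.711 needs about 80 kbps per direction and G.729 about 24 kbps. Header overhead dominates for low-bitrate codecs, which is one reason packetization interval matters as much as codec choice.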

Security challenges for VoIP include eavesdropping, denial of service attacks, and toll fraud exploiting improperly secured systems. Encryption protocols including Secure Real-time Transport Protocol protect voice communications from interception. VoIP monitoring tools ensure quality remains acceptable, identifying network issues before they impact users. Expertise in Avaya VoIP systems demonstrates specialized telephony knowledge. VoIP represents communication infrastructure convergence with data networking, enabling organizations to manage unified networks rather than separate voice and data infrastructures. This convergence continues with unified communications integrating additional communication modalities.

Apple Ecosystem and Consumer Computing Integration

Apple created a distinctive computing ecosystem integrating hardware, software, and services into a cohesive user experience that differentiates it from competitors. Beginning with Macintosh personal computers, Apple expanded into mobile devices with the iPhone and iPad, wearables with the Apple Watch, and services including iCloud and Apple Music. The ecosystem’s integration enables seamless experiences across devices, with features like Handoff allowing users to begin tasks on one device and continue on another. Apple’s controlled ecosystem approach contrasts with more open platforms, prioritizing user experience consistency and security. Organizations increasingly support Apple devices as employees prefer iOS and macOS, even though enterprise IT historically standardized on Windows.

Apple’s enterprise adoption required mobile device management solutions enabling IT departments to configure and secure Apple devices. The iOS security architecture, including app sandboxing and hardware-backed encryption, appeals to security-conscious organizations. Developer tools including Xcode and the Swift programming language enable creation of applications for Apple platforms. Professionals pursuing Apple platform expertise gain valuable skills given the ecosystem’s market presence. Apple’s influence extends beyond devices to shaping user experience expectations and privacy standards across the technology industry. The ecosystem demonstrates that integrated approaches can compete successfully against more open alternatives.

Real Estate Appraisal Systems and Property Valuation Technology

The real estate industry adopted computing technology for property valuation, market analysis, and transaction management. Appraisal software automates aspects of property valuation, incorporating comparable sales data, market trends, and property characteristics. Geographic information systems enable spatial analysis relevant to property values, considering factors including location, proximity to amenities, and neighborhood characteristics. Automated valuation models use statistical techniques and machine learning to estimate property values, though professional appraisers remain necessary for official valuations. Technology improved appraisal efficiency, consistency, and documentation while expanding analytical capabilities beyond manual methods.
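
As a rough illustration of the comparable-sales idea behind automated valuation, the sketch below estimates a value from the median price per square foot of a few comparable sales. This is a deliberately simplified stand-in, not how any production valuation model works; the class, function, and sample figures are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class ComparableSale:
    sale_price: float   # recorded sale price
    living_area: float  # square feet


def estimate_value(comparables: list, subject_area: float) -> float:
    """Estimate value as median comparable price per square foot times subject area."""
    if not comparables:
        raise ValueError("at least one comparable sale is required")
    # Median is used so one unusual sale does not dominate the estimate.
    price_per_sqft = median(c.sale_price / c.living_area for c in comparables)
    return price_per_sqft * subject_area


# Hypothetical comparable sales for illustration only.
comps = [
    ComparableSale(300_000, 1_500),  # 200 per sq ft
    ComparableSale(450_000, 2_000),  # 225 per sq ft
    ComparableSale(380_000, 2_000),  # 190 per sq ft
]
print(estimate_value(comps, 1_800))
```

Real models adjust for location, condition, and sale date, which is part of why appraiser judgment remains necessary for official valuations.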

Cloud-based appraisal platforms enable collaboration between appraisers, lenders, and other stakeholders while maintaining secure data management. Mobile applications allow appraisers to collect property data and photographs on-site, immediately synchronizing with office systems. Integration with multiple listing services and public records automates data gathering previously requiring manual research. Expertise in property valuation platforms demonstrates specialized domain knowledge. Real estate technology adoption illustrates how specialized industries leverage computing for process improvement and enhanced decision-making. Industry-specific platforms provide capabilities that general-purpose software cannot address effectively.

Pharmaceutical Supply Chain and Distribution Management Systems

The pharmaceutical industry requires sophisticated supply chain systems ensuring drug safety, regulatory compliance, and efficient distribution. Track-and-trace systems monitor pharmaceutical products from manufacturing through delivery to patients, combating counterfeiting and enabling rapid recall if safety issues emerge. Serialization assigns unique identifiers to individual drug packages, creating detailed visibility throughout supply chains. Temperature monitoring ensures medications requiring refrigeration remain within acceptable ranges throughout distribution. Regulatory requirements including the Drug Supply Chain Security Act mandate specific tracking and verification capabilities. Pharmaceutical supply chain complexity requires specialized software addressing unique industry requirements.

Integration between manufacturing systems, distribution networks, pharmacy systems, and regulatory reporting creates comprehensive pharmaceutical supply chain visibility. Blockchain technologies promise enhanced traceability and authentication for pharmaceutical products. Analytics identify supply chain inefficiencies and predict demand, optimizing inventory levels and distribution. Professional knowledge of pharmaceutical supply systems demonstrates specialized expertise. Pharmaceutical computing applications illustrate how regulated industries require purpose-built systems addressing compliance, safety, and operational requirements simultaneously. Technology enables pharmaceutical companies to meet regulatory obligations while optimizing operations.

Service-Oriented Architecture and Enterprise System Design

Service-oriented architecture (SOA) emerged as an enterprise design pattern organizing functionality as interoperable services accessible through standardized interfaces. SOA enables organizations to build applications by composing services, promoting reusability and flexibility. Services encapsulate specific business capabilities, exposing functionality through well-defined contracts independent of underlying implementation. Enterprise service buses provide infrastructure for service communication, handling routing, transformation, and orchestration. SOA addresses organizational challenges including application integration, business process automation, and legacy system modernization. This architectural approach enables agility by allowing applications to evolve through service composition rather than monolithic redevelopment.

SOA governance ensures services remain consistent, discoverable, and properly managed across the enterprise. Service registries catalog available services, facilitating discovery and reuse. Organizations measure SOA success through metrics including service reuse rates and time-to-market for new capabilities. Microservices architectures evolved from SOA principles, emphasizing smaller, more focused services deployed independently. Understanding service architecture principles demonstrates enterprise design expertise. SOA represents a significant evolution in enterprise architecture thinking, moving from monolithic applications toward composable, flexible system landscapes. Organizations continue applying these principles as architectures evolve toward cloud-native patterns.

Support Center Operations and Technical Service Delivery

Technical support centers provide critical customer service for technology products and services, requiring sophisticated systems for case management, knowledge management, and communication. Ticketing systems track support requests from initial contact through resolution, ensuring accountability and enabling performance measurement. Knowledge bases enable support agents to access solutions to common problems, improving resolution speed and consistency. Omnichannel support platforms integrate phone, email, chat, and social media interactions into unified agent interfaces. Organizations measure support center performance through metrics including first contact resolution, average handling time, and customer satisfaction scores.
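
Two of the metrics named above reduce to simple ratios. The sketch below assumes a simplified ticket record with a contact count and total handling time, and it divides handling time by contacts; real platforms define and collect these figures in their own ways, so the names and definitions here are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    contacts: int          # customer contacts needed before resolution
    handle_minutes: float  # total agent handling time across those contacts


def first_contact_resolution(tickets: list) -> float:
    """Fraction of resolved tickets that needed exactly one contact."""
    if not tickets:
        raise ValueError("no tickets to measure")
    return sum(1 for t in tickets if t.contacts == 1) / len(tickets)


def average_handling_time(tickets: list) -> float:
    """Average agent minutes per customer contact."""
    total_contacts = sum(t.contacts for t in tickets)
    if total_contacts == 0:
        raise ValueError("no contacts to measure")
    return sum(t.handle_minutes for t in tickets) / total_contacts


# Hypothetical resolved tickets for illustration.
resolved = [Ticket(1, 6.0), Ticket(1, 4.0), Ticket(3, 18.0), Ticket(1, 8.0)]
print(first_contact_resolution(resolved), average_handling_time(resolved))
```

For the sample data, three of four tickets were resolved on first contact (0.75), and agents spent 36 minutes across 6 contacts (6.0 minutes each).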

Remote support tools enable agents to access customer systems directly, diagnosing and resolving issues without requiring on-site visits. Chatbots and artificial intelligence augment human agents, handling routine inquiries while escalating complex issues. Workforce management systems optimize agent scheduling, balancing service levels against operational costs. Expertise in support center technologies demonstrates customer service platform knowledge. Support center technology investments reflect recognition that customer service quality directly impacts satisfaction, retention, and brand perception. Organizations continuously seek efficiency improvements while maintaining service quality.

Video Surveillance Infrastructure and Security Monitoring Systems

Video surveillance evolved from analog camera systems to sophisticated Internet Protocol camera networks with advanced analytics capabilities. Modern surveillance systems provide high-definition video, remote access, and integration with access control and alarm systems. Network video recorders replace traditional digital video recorders, offering greater flexibility and scalability. Video analytics including motion detection, facial recognition, and behavior analysis automate monitoring, alerting security personnel to suspicious activities. Cloud storage options enable long-term video retention without on-premises storage infrastructure. Surveillance systems balance security benefits against privacy concerns, requiring appropriate policies and controls.

Integration between surveillance and other security systems creates comprehensive security operations centers monitoring multiple information sources simultaneously. Cybersecurity considerations for surveillance systems include network segmentation isolating camera networks, encryption for video streams, and access controls limiting video access to authorized personnel. Bandwidth requirements for high-definition video influence network design decisions. Professional certification in video surveillance platforms demonstrates specialized security technology expertise. Surveillance system sophistication reflects increasing emphasis on physical security and the role technology plays in safety and loss prevention programs.

Applied Behavior Analysis Data Systems and Treatment Tracking

Applied behavior analysis practitioners use specialized software for treatment planning, data collection, and progress monitoring for individuals with developmental disabilities. These systems enable therapists to define treatment goals, record behavioral observations, and analyze intervention effectiveness. Mobile applications facilitate real-time data collection during therapy sessions, replacing paper-based recording. Data visualization helps clinicians and families understand progress over time. Integration between clinical and administrative systems streamlines scheduling, billing, and reporting. Technology improves treatment quality by enabling more frequent measurement and data-driven intervention adjustments.

Regulatory compliance requirements including HIPAA mandate appropriate security and privacy controls for behavioral health data. Cloud platforms enable multi-site organizations to standardize treatment approaches while sharing best practices. Analytics identify treatment patterns associated with better outcomes, advancing evidence-based practice. Expertise in behavioral health systems demonstrates specialized clinical technology knowledge. Applied behavior analysis software illustrates how specialized professional fields develop purpose-built systems addressing unique requirements that general healthcare systems don’t adequately address.

Clinical Behavior Analysis and Professional Practice Management

Board certified behavior analysts rely on practice management systems coordinating clinical care, documentation, and business operations. These platforms manage client demographics, treatment authorizations, session scheduling, and outcome tracking. Documentation templates ensure behavioral intervention plans contain required elements while reducing clinician documentation burden. Billing integration connects clinical documentation with insurance claim generation, ensuring services are properly reimbursed. Compliance features help practices adhere to funding source requirements and professional standards. Technology enables behavior analysts to maintain smaller administrative staffs while serving more clients.

Telehealth capabilities expanded in recent years, enabling remote behavioral consultations and parent training. Outcome measurement systems demonstrate treatment effectiveness to funding sources and families. Interoperability with electronic health records enables coordination with other healthcare providers serving shared clients. Understanding clinical practice platforms demonstrates behavioral healthcare technology expertise. Practice management systems for specialized clinical fields must balance general healthcare requirements with profession-specific workflows and documentation standards. Technology investment enables clinical practices to operate more efficiently while improving care quality.

Project Management Methodologies and Implementation Frameworks

PRINCE2 established a structured project management methodology that is widely adopted globally, particularly in government and large organizations. The framework defines specific project roles, processes, and documentation standards ensuring consistent project execution. Organizations adopt PRINCE2 to improve project success rates, reduce risks, and ensure stakeholder alignment. Certification in PRINCE2 demonstrates understanding of structured project management approaches. The methodology emphasizes business justification, defined organization structures, and product-based planning. PRINCE2’s prescriptive nature provides clarity but may feel bureaucratic for smaller projects or organizations valuing agility.

Tailoring PRINCE2 to organizational context balances methodology benefits against administrative overhead. Integration between PRINCE2 processes and organizational governance ensures projects align with strategic objectives. Project management tools support PRINCE2 implementation by automating documentation and workflow. Professionals pursuing PRINCE2 foundations gain internationally recognized qualifications. PRINCE2 represents a structured approach to project management, contrasting with more adaptive methodologies like agile. Organizations often blend methodologies, selecting approaches appropriate for specific project characteristics and organizational culture.

Software Testing Standards and Quality Assurance Certification

The International Software Testing Qualifications Board (ISTQB) established a globally recognized software testing certification scheme, professionalizing quality assurance practices. ISTQB certifications demonstrate testing knowledge spanning test design techniques, test management, and specialized testing domains. Organizations employing certified testers benefit from standardized testing approaches and common terminology. Certification levels progress from foundation through advanced to expert, enabling testers to demonstrate increasing expertise. Software quality directly impacts user satisfaction, system reliability, and organizational reputation, making effective testing essential. Structured testing approaches reduce defects reaching production while optimizing testing efficiency.

Test automation tools enable regression testing at scale, ensuring software changes don’t introduce new defects. Continuous integration pipelines incorporate automated testing, providing rapid feedback to developers. Specialized testing including performance, security, and usability requires specific expertise and tools beyond functional testing. Pursuing software testing certification demonstrates quality assurance professionalism. Software testing evolution mirrors broader software development trends toward automation, integration with development processes, and continuous quality verification. Organizations increasingly recognize testing as a specialized discipline requiring trained professionals rather than a secondary developer responsibility.

Requirements Engineering and Systems Analysis Practices

Requirements engineering establishes the foundation for successful software development by systematically identifying, documenting, and managing stakeholder needs. Poor requirements contribute significantly to project failures, making effective requirements practices critical for success. Requirements elicitation techniques including interviews, workshops, and observation gather information from diverse stakeholders. Requirements documentation balances completeness with understandability, ensuring all parties share a common understanding. Requirements management tracks changes throughout project lifecycles, maintaining traceability between requirements and implementation. Specialized tools support requirements engineering, providing capabilities beyond simple documents.

Agile methodologies approach requirements differently from traditional approaches, emphasizing user stories and continuous refinement over comprehensive upfront specification. However, fundamental requirements engineering principles remain relevant regardless of development methodology. Requirements validation ensures documented requirements accurately reflect stakeholder needs before significant development investment occurs. Professional expertise in requirements engineering demonstrates systematic analysis capabilities. Requirements engineering represents a critical bridge between business needs and technical implementation, requiring both domain understanding and technical knowledge. Organizations that recognize the importance of requirements quality invest in requirements engineering training and tools.

Data Center Infrastructure and Registered Communications Design

Data center design requires specialized expertise balancing power, cooling, networking, and physical security considerations. Registered Communications Distribution Designer certification demonstrates knowledge of telecommunications infrastructure design principles. Modern data centers consume enormous power while generating tremendous heat, requiring sophisticated cooling systems. Network topology decisions impact performance, redundancy, and scalability. Physical security controls protect expensive equipment and sensitive data from unauthorized access. Modular data center designs enable incremental expansion as organizational needs grow. Emerging edge computing architectures distribute processing closer to data sources, requiring new design approaches beyond centralized data centers.

Data center efficiency metrics including Power Usage Effectiveness (PUE) measure how effectively facilities convert power into useful computing versus overhead such as cooling. Sustainability concerns drive data center operators toward renewable energy and improved cooling efficiency. Software-defined data centers abstract physical infrastructure through virtualization, enabling programmatic resource allocation. Understanding communication distribution design demonstrates infrastructure planning expertise. Data center infrastructure represents a foundational layer supporting cloud computing, enterprise systems, and Internet services. Organizations increasingly outsource data center operations to specialized providers while maintaining hybrid approaches for specific workloads.
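
Power Usage Effectiveness is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead at all. A minimal sketch of that ratio follows; the function names and sample readings are illustrative.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy (always >= 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_fraction(pue: float) -> float:
    """Share of total energy spent on cooling, power distribution, and other overhead."""
    return 1.0 - 1.0 / pue


# Hypothetical monthly readings: 1,500 MWh for the facility, 1,000 MWh for IT equipment.
pue = power_usage_effectiveness(1_500_000, 1_000_000)
print(f"PUE {pue:.2f}, overhead {overhead_fraction(pue):.0%}")
```

In this example the PUE is 1.5, meaning one third of the facility's energy goes to overhead rather than computing.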

Mobile Device Management and Enterprise Mobility Support

Organizations supporting mobile workforces require mobile device management platforms configuring, securing, and monitoring smartphones and tablets. MDM solutions enforce security policies including password requirements, encryption, and application restrictions. Remote wipe capabilities protect organizational data if devices are lost or stolen. Application management distributes enterprise applications while preventing installation of unapproved software. Organizations balance security requirements against employee privacy expectations, particularly for personally owned devices used for work. Containerization separates work data from personal information on shared devices. Mobile device proliferation created new support challenges requiring specialized expertise.

BlackBerry historically dominated the enterprise mobile market before the iPhone transformed the industry. Despite market share decline, BlackBerry’s security focus maintained a niche in security-conscious organizations and government. Understanding BlackBerry support systems demonstrates historical mobile platform knowledge. Modern MDM platforms support diverse operating systems including iOS, Android, and Windows. Unified endpoint management extends MDM concepts to include desktops and laptops alongside mobile devices. Organizations pursue zero-trust security models eliminating assumptions about device trustworthiness based solely on network location. Mobile security represents an ongoing challenge as devices access organizational resources from uncontrolled networks and locations.

Blockchain Foundations and Distributed Ledger Technologies

Blockchain technology enables decentralized record-keeping without trusted intermediaries through cryptographic techniques and distributed consensus. Bitcoin demonstrated blockchain’s potential for digital currency, but applications extend far beyond cryptocurrency. Distributed ledgers provide tamper-resistant transaction histories useful for supply chain tracking, digital identity, and smart contracts. Organizations explore blockchain for use cases requiring transparency, immutability, and multi-party coordination without central authority. Blockchain’s distributed nature eliminates single points of failure while enabling parties without established trust relationships to transact. However, blockchain’s energy consumption, scalability limitations, and regulatory uncertainty temper adoption enthusiasm.
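
The tamper-resistance property comes from each record carrying a cryptographic hash of its predecessor. The sketch below shows only that hash-linking idea using SHA-256; it deliberately omits distributed consensus, signatures, and networking, and the data layout and function names are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder predecessor for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """Hash a block's contents together with its predecessor's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list, data: str) -> None:
    """Add a block that commits to the current tip of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain: list) -> bool:
    """Recompute every hash and check each link back to the genesis placeholder."""
    prev_hash = GENESIS_PREV_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
for entry in ["shipment received", "shipment inspected", "shipment delivered"]:
    append_block(ledger, entry)
print(is_valid(ledger))          # True: untouched history verifies

ledger[0]["data"] = "shipment lost"
print(is_valid(ledger))          # False: editing history breaks the stored hash
```

Because every later block depends on earlier hashes, rewriting one record would require recomputing all subsequent blocks. In a real distributed ledger, consensus among participants is what makes that rewrite impractical.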

Private blockchain implementations sacrifice some decentralization for improved performance and control appropriate for enterprise use cases. Smart contracts encode business logic executing automatically when conditions are met, potentially automating agreements and reducing intermediary costs. Blockchain intersects with other technologies including Internet of Things for device coordination and supply chain for provenance tracking. Understanding blockchain business foundations demonstrates emerging technology knowledge. While blockchain hype exceeded near-term reality, distributed ledger concepts continue influencing system design. Organizations evaluate blockchain thoughtfully, recognizing its strengths for specific use cases while acknowledging limitations.

IP Office Platform Architecture and Deployment Strategies

IP Office platforms provide communication infrastructure for small and medium businesses, offering enterprise-grade capabilities at appropriate scale and cost. These systems integrate voice, video, messaging, and collaboration into unified platforms. Deployment options include on-premises hardware, virtualized implementations, and cloud-hosted services enabling organizations to select approaches matching their requirements and capabilities. IP Office scales from small single-site deployments to distributed organizations with multiple locations. System resilience features including redundancy and automatic failover ensure communication availability even during component failures. Organizations migrate from legacy phone systems to IP platforms seeking advanced features and operational cost reductions.

IP Office integrates with contact center applications, enabling sophisticated customer service capabilities including automated call distribution and skills-based routing. Mobile applications extend communication features to smartphones, enabling employees to maintain a single business identity across devices. APIs enable custom integrations connecting communication with business applications. Expertise in IP Office implementations demonstrates communication platform proficiency. IP-based communication represents infrastructure convergence, unifying previously separate voice and data networks. Organizations benefit from simplified management, reduced costs, and enhanced capabilities compared to traditional telephone systems.

Network Infrastructure Management and Operations Excellence

Modern networks require continuous monitoring, management, and optimization ensuring performance, security, and reliability. Network management systems provide visibility into network device status, traffic patterns, and potential issues. Configuration management maintains consistent settings across network devices while tracking changes over time. Performance monitoring identifies bottlenecks and capacity constraints before they impact users. Automation capabilities enable rapid deployment of configuration changes across multiple devices simultaneously. Network operations centers monitor infrastructure continuously, responding to alerts and coordinating incident resolution. Effective network management balances proactive monitoring with rapid incident response.

Software-defined networking separates the network control plane from the data plane, enabling programmatic network management through centralized controllers. Network automation reduces manual configuration errors while enabling rapid response to changing requirements. Intent-based networking allows administrators to specify desired outcomes rather than detailed configurations, with systems automatically implementing appropriate settings. Understanding network management practices demonstrates operational expertise. Network infrastructure evolution toward automation and software-defined approaches transforms network operations from manual device configuration toward orchestration and policy management. Organizations seek network professionals combining traditional networking knowledge with programming and automation skills.
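
The intent-based idea of declaring an outcome and letting software work out the changes can be sketched as a diff between desired and observed state. The example below uses VLAN assignments per switch as the state; the device names, data shapes, and function are hypothetical and do not correspond to any vendor's controller API.

```python
from __future__ import annotations


def plan_changes(
    desired: dict[str, set[int]], actual: dict[str, set[int]]
) -> dict[str, dict[str, list[int]]]:
    """Compute per-device VLAN additions and removals needed to reach the desired state."""
    plan = {}
    for device in sorted(desired.keys() | actual.keys()):
        want = desired.get(device, set())
        have = actual.get(device, set())
        add = sorted(want - have)      # configured in intent but missing on the device
        remove = sorted(have - want)   # present on the device but not in intent
        if add or remove:
            plan[device] = {"add": add, "remove": remove}
    return plan


# Hypothetical intent and observed state.
desired_state = {"switch-1": {10, 20}, "switch-2": {30}}
observed_state = {"switch-1": {10, 99}, "switch-2": {30}}
print(plan_changes(desired_state, observed_state))
```

Only devices that drift from the declared intent appear in the plan, so a controller applying it would leave compliant devices untouched.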

Audiovisual Systems Integration and Meeting Room Technology

Organizations invest in audiovisual systems enhancing meeting effectiveness through video conferencing, digital signage, and presentation technologies. The Certified Technology Specialist (CTS) credential demonstrates audiovisual design and implementation expertise. Modern meeting rooms incorporate cameras, microphones, displays, and control systems enabling seamless collaboration between local and remote participants. Integration between audiovisual systems and collaboration platforms ensures consistent user experiences across different spaces. Organizations standardize meeting room technologies, reducing user confusion and support requirements. Wireless presentation systems eliminate cable complexity while enabling any participant to share content easily.

Digital signage communicates information throughout facilities, from corporate messaging to wayfinding and emergency notifications. Video walls create immersive experiences for lobbies, control rooms, and presentation spaces. Audiovisual systems increasingly leverage network infrastructure, converging with IT systems. Pursuing audiovisual certification demonstrates specialized integration knowledge. Audiovisual technology evolution mirrors broader computing trends toward network-based, software-managed systems. Organizations recognize effective meeting technology directly impacts collaboration quality, particularly as remote work increases. Investment in meeting room technology enables productive hybrid work models supporting distributed teams.

Network Virtualization and Software-Defined Infrastructure

Network virtualization abstracts network services from underlying physical infrastructure, enabling flexible, programmable network architectures. Virtual networks operate independently atop shared physical infrastructure, providing isolation while optimizing resource utilization. Software-defined wide area networks optimize connectivity between distributed locations, dynamically routing traffic based on application requirements and link quality. Network function virtualization replaces dedicated hardware appliances with software implementations running on standard servers. These technologies enable organizations to deploy network services more rapidly while reducing hardware costs. Cloud providers extensively use network virtualization, enabling multitenant isolation and flexible network topologies.
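
The dynamic routing idea behind software-defined wide area networks can be illustrated with a small sketch. It is not how any particular product works: the `Link` and `AppPolicy` types, the link names, and the thresholds are all hypothetical, and real controllers weigh many more signals (jitter, cost, bandwidth). The sketch only shows the principle of matching an application's requirements against measured link quality.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Measured quality of one WAN link (hypothetical fields)."""
    name: str
    latency_ms: float
    loss_pct: float


@dataclass(frozen=True)
class AppPolicy:
    """What an application needs from the network (hypothetical fields)."""
    name: str
    max_latency_ms: float
    max_loss_pct: float


def choose_link(policy, links):
    """Pick the lowest-latency link that satisfies the policy.

    If no link meets the requirements, fall back to the best available
    link rather than dropping traffic (an assumption of this sketch).
    """
    links = list(links)
    if not links:
        raise ValueError("no links available")
    eligible = [
        link for link in links
        if link.latency_ms <= policy.max_latency_ms
        and link.loss_pct <= policy.max_loss_pct
    ]
    pool = eligible or links
    return min(pool, key=lambda link: (link.latency_ms, link.loss_pct))


voice = AppPolicy("voice", max_latency_ms=50, max_loss_pct=1.0)

normal = [Link("mpls", 20, 0.1), Link("broadband", 45, 0.5), Link("lte", 80, 2.0)]
degraded = [Link("mpls", 120, 3.0), Link("broadband", 45, 0.5), Link("lte", 80, 2.0)]

print(choose_link(voice, normal).name)
print(choose_link(voice, degraded).name)
```

When the measurements for the first link degrade, the same policy selects a different path without any manual reconfiguration, which is the behavior the paragraph above describes.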

Overlay networks create logical topologies independent of physical network structure, simplifying complex network designs. Automation capabilities enable rapid provisioning of network services that matches application deployment velocity. Security benefits include microsegmentation, which isolates workloads and limits lateral movement during security incidents. Understanding network virtualization technologies demonstrates advanced networking knowledge. Software-defined approaches transform networking from a hardware-centric to a software-driven discipline, requiring network professionals to develop programming and automation skills. Organizations adopting network virtualization gain agility and efficiency while managing infrastructure complexity.
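
Microsegmentation is easiest to picture as a default-deny allow-list between workload groups. The following is a minimal sketch under that assumption; the segment names, ports, and the `is_allowed` helper are hypothetical and do not correspond to any vendor's policy format.

```python
# Explicitly permitted flows: (source segment, destination segment, port).
# Anything not listed is denied, which limits lateral movement.
ALLOWED_FLOWS = {
    ("web", "app", 8443),
    ("app", "db", 5432),
}


def is_allowed(src_segment, dst_segment, port):
    """Return True only if the flow is explicitly on the allow-list."""
    return (src_segment, dst_segment, port) in ALLOWED_FLOWS


print(is_allowed("web", "app", 8443))  # web tier may call the app tier
print(is_allowed("web", "db", 5432))   # web tier may not reach the database directly
```

A compromised web workload in this model cannot talk to the database segment at all, because no rule grants that path.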

Axis Communications and Network Video Solutions

Network video technology transformed surveillance from analog closed-circuit systems to Internet Protocol cameras with advanced analytics. Axis Communications pioneered network camera development, establishing standards adopted broadly across the industry. Network cameras provide superior image quality compared to analog predecessors while enabling digital storage and remote access. Power over Ethernet simplifies camera installation by delivering power and data through a single cable. Camera analytics, including motion detection, people counting, and license plate recognition, automate monitoring tasks that previously required continuous human observation. Integration with video management platforms enables centralized monitoring across multiple locations.

Edge processing capabilities enable cameras to perform analytics locally, reducing bandwidth requirements and allowing operation even if network connectivity fails. High-definition and even 4K resolution cameras provide image detail that supports forensic investigation and facial recognition. Cybersecurity considerations for network cameras include firmware updates, secure configuration, and network segmentation that isolates camera networks. Expertise in network video systems demonstrates surveillance technology knowledge. Network video represents the convergence of physical security with information technology, requiring security professionals to understand both disciplines. Organizations increasingly leverage video analytics for purposes beyond security, including operational intelligence and customer behavior analysis.

Assistant Behavior Analysis and Certification Pathways

Board certified assistant behavior analysts provide behavioral services under the supervision of board certified behavior analysts, implementing treatment plans and collecting data. Training programs combine academic coursework with supervised practical experience to ensure competence before practice. Certification demonstrates minimum competency standards, protecting clients receiving behavioral services. Supervisory relationships enable assistant behavior analysts to develop skills while maintaining quality oversight. Career progression pathways from assistant to full behavior analyst provide structured professional development. Demand for behavioral services exceeds the supply of qualified professionals, creating strong employment prospects for certified practitioners.

Continuing education requirements ensure practitioners remain current with evolving best practices and research. Professional organizations provide resources, networking opportunities, and advocacy for the behavior analysis profession. Technology increasingly supports service delivery through telehealth and data management platforms. Understanding assistant behavior certification demonstrates behavioral health professional knowledge. Applied behavior analysis represents an evidence-based approach to behavior change with applications spanning developmental disabilities, mental health, education, and organizational performance. Professional certification elevates practice standards while protecting consumers from unqualified practitioners.

Board Certified Behavior Analyst Advanced Practice

Board certified behavior analysts represent the highest level of independent practice in the applied behavior analysis field. Certification requires substantial academic preparation, including a master’s degree or doctorate, extensive supervised experience, and a passing score on a comprehensive examination. BCBAs design behavioral interventions, supervise implementation, and evaluate treatment effectiveness. Professional responsibilities include maintaining ethical practice, contributing to the evidence base through research, and supervising assistant behavior analysts and technicians. Demand for qualified behavior analysts continues to grow as recognition of behavioral intervention expands across educational, clinical, and organizational settings.

Specializations within behavior analysis include verbal behavior, organizational behavior management, clinical behavior analysis, and experimental analysis of behavior. Technology tools support assessment, intervention planning, data analysis, and professional collaboration. Professional development includes conferences, journals, and specialized training addressing specific populations or intervention approaches. Pursuing behavior analyst certification represents a significant professional commitment with corresponding career opportunities. Applied behavior analysis represents an empirical approach to understanding and changing behavior, with applications extending far beyond developmental disabilities to include performance improvement in diverse contexts.

Software Testing Qualifications and International Standards

The American Software Testing Qualifications Board (ASTQB) provides the United States implementation of the international software testing certification scheme. Standardized testing knowledge enables consistent quality assurance practices across organizations and projects. Certification levels include foundation, advanced, and expert, enabling testers to demonstrate progressive expertise. Specialized modules address specific testing domains including test automation, agile testing, and security testing. Organizations employing certified testers benefit from common terminology and a shared understanding of testing principles. Professional certification differentiates qualified testers in competitive job markets while providing structured learning paths for career development.

Test automation increasingly supplements manual testing, requiring testers to develop programming and tool expertise. Agile methodologies integrate testing throughout development rather than treating it as a separate phase, changing tester roles and required skills. Performance testing ensures applications meet responsiveness and scalability requirements under expected loads. Pursuing software testing credentials demonstrates quality assurance professionalism. Software testing evolution reflects broader software development trends toward continuous integration, automation, and quality-focused cultures. Organizations recognize testing’s critical role in delivering reliable software that meets user expectations.

Forensic Consulting and Digital Investigation Methodologies

Digital forensics involves identifying, preserving, analyzing, and presenting electronic evidence for legal proceedings and incident investigation. Forensic consultants assist organizations investigating security breaches, employee misconduct, intellectual property theft, and regulatory violations. Specialized tools extract and analyze data from computers, mobile devices, networks, and cloud services. Chain of custody procedures ensure evidence integrity and admissibility in legal proceedings. Forensic analysis techniques recover deleted files, identify unauthorized access, and reconstruct event timelines. Organizations facing incidents require forensic expertise to determine breach scope, identify responsible parties, and prevent future occurrences.
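
One common way to support evidence integrity is to record a cryptographic hash of each item when it is collected and to re-check that hash whenever the item changes hands. The sketch below assumes SHA-256 and uses Python's standard `hashlib` module; the function names are illustrative, and a real chain of custody also documents who handled the evidence, when, and why.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large disk images do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_evidence(path, recorded_hash):
    """Return True if the file still matches the hash recorded at acquisition."""
    return sha256_of_file(path) == recorded_hash
```

If even a single byte of the file changes after acquisition, `verify_evidence` returns False, which signals that the copy can no longer be treated as identical to what was collected.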

Certifications demonstrate forensic investigation competence and adherence to professional standards. Cloud computing complicates forensics because evidence resides in provider infrastructure rather than in systems the organization controls. Mobile device proliferation requires specialized tools and techniques that address unique challenges, including device variety and encryption. Understanding forensic consulting practices demonstrates investigative expertise. Digital forensics represents a specialized discipline combining technical skills, legal knowledge, and investigative techniques. Organizations increasingly need forensic capabilities as cyber incidents proliferate and regulatory requirements mandate breach investigations and reporting.

Project Management Foundations and Professional Certification

Project management establishes structured approaches to planning, executing, and controlling initiatives that deliver specific outcomes. PRINCE2 Foundation certification demonstrates understanding of a widely adopted project management methodology. Projects are temporary endeavors with defined objectives, which distinguishes them from ongoing operations. Effective project management increases the likelihood of success while reducing risks. Structured methodologies provide frameworks that ensure consistent project execution across organizations. Project managers coordinate resources, manage stakeholder expectations, and overcome obstacles impeding project progress. Organizations benefit from project management professionalism through improved delivery predictability and stakeholder satisfaction.

Project management tools support scheduling, resource allocation, collaboration, and reporting. Agile project management emphasizes iterative delivery and continuous stakeholder engagement rather than comprehensive upfront planning. Portfolio management prioritizes projects based on strategic alignment and resource availability. Pursuing project management certification demonstrates professional commitment. Project management represents an essential organizational capability that enables strategic initiative execution. Technology projects particularly benefit from structured management approaches given their complexity and potential for scope expansion. Organizations increasingly recognize project management as a distinct profession requiring specific skills and knowledge.

Software Testing Specialization and Advanced Practices

Advanced software testing addresses complex quality assurance challenges including integration testing, system testing, and acceptance testing. Specialized testing techniques ensure software meets functional requirements while performing adequately under expected loads. Test design techniques including equivalence partitioning, boundary value analysis, and decision tables optimize test coverage while keeping the number of test cases manageable. Risk-based testing prioritizes effort on high-risk areas where defects cause the greatest impact. Exploratory testing complements scripted testing by leveraging tester creativity to identify unexpected issues. Testing throughout the development lifecycle catches defects earlier, when fixes cost less.
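
Equivalence partitioning and boundary value analysis can be shown with a short sketch. It assumes an input that is valid within an inclusive integer range; the `is_valid_age` function and its 18 to 65 range are hypothetical stand-ins for a system under test.

```python
def boundary_values(low, high):
    """Return test inputs just outside, on, and just inside each boundary
    of the inclusive integer range [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    # A set removes duplicates when the range is very narrow.
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def equivalence_partitions(low, high):
    """Return one representative value per partition: below, inside, above."""
    return {"below": low - 1, "valid": (low + high) // 2, "above": high + 1}


def is_valid_age(age):
    """Hypothetical system under test: accepts ages 18 through 65."""
    return 18 <= age <= 65


for value in boundary_values(18, 65):
    print(value, is_valid_age(value))
```

Six boundary inputs plus three partition representatives exercise the range check far more economically than testing every possible age, which is how these techniques keep test case counts manageable.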

Test data management ensures adequate test scenarios without exposing sensitive production data during testing. Testing environments replicate production configurations enabling realistic quality verification before deployment. Metrics including defect density and test coverage inform quality assessment and release decisions. Understanding advanced testing practices demonstrates quality assurance expertise. Software testing continues evolving as development practices change and systems become more complex. Organizations investing in testing capabilities reduce production defects, improve user satisfaction, and protect reputation. Effective testing balances thoroughness against project timelines and resource constraints.
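
The metrics mentioned above can be computed in a few lines. This sketch assumes one common definition of defect density (defects per thousand lines of code) and treats coverage as the fraction of requirements exercised by at least one test; the release thresholds are illustrative assumptions, not industry standards.

```python
def defect_density(defect_count, size_kloc):
    """Defects per thousand lines of code (one common definition)."""
    if size_kloc <= 0:
        raise ValueError("size_kloc must be positive")
    return defect_count / size_kloc


def requirement_coverage(covered, total):
    """Fraction of requirements exercised by at least one test."""
    if total <= 0:
        raise ValueError("total must be positive")
    return covered / total


def ready_for_release(density, coverage, max_density=3.0, min_coverage=0.85):
    """Hypothetical release gate; the thresholds are assumptions for illustration."""
    return density <= max_density and coverage >= min_coverage


density = defect_density(30, 12.0)
coverage = requirement_coverage(45, 50)
print(density, coverage, ready_for_release(density, coverage))
```

In practice teams set such thresholds per project and use them alongside judgment rather than as the sole release criterion.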

PRINCE2 Practitioner and Advanced Project Management

PRINCE2 Practitioner certification demonstrates the ability to apply project management methodology to real projects within organizational contexts. Advanced certification requires passing a scenario-based examination that assesses tailoring and application skills beyond foundational knowledge. Practitioners adapt PRINCE2 principles to specific project contexts rather than rigidly applying prescriptive processes. Tailoring considers project size, complexity, risk, and organizational maturity when determining appropriate management approaches. Experienced practitioners balance methodology benefits against administrative overhead, maintaining necessary rigor without bureaucratic burden. Integration between project management and broader organizational governance ensures project outcomes align with strategic objectives.

Lessons learned processes capture project experiences, continuously improving organizational project management capabilities. Benefits realization management ensures projects deliver intended value beyond merely completing deliverables on schedule and within budget. Stakeholder engagement strategies maintain support and manage expectations throughout project lifecycles. Pursuing PRINCE2 Practitioner level demonstrates advanced project management proficiency. Mature project management practices contribute significantly to organizational success, particularly for organizations executing numerous complex initiatives simultaneously. Project management represents an essential business discipline that transcends specific industries and technical domains.

Conclusion: